Motivations

Context

Unlike traditional microphones, which rely on airborne sound waves, body-conduction microphones capture speech signals directly from the body, offering advantages in noisy environments by eliminating the capture of ambient noise. Although body-conduction microphones have been available for decades, their limited bandwidth has restricted their widespread usage. However, thanks to two tracks of improvements, this may be the awakening of this technology to a wide public for speech capture and communication in noisy environments.

Research progress

On the one hand, research development on the physics and electronics part is improving with some skin-attachable sensors. Like previous bone and throat microphones, these new wearable sensors detect skin acceleration, which is highly and linearly correlated with voice pressure. They improve the state of the art by having superior sensitivity over the voice frequency range, which helps improve the signal-to-noise ratio, and also have superior skin conformity, which facilitates adhesion to curved skin surfaces. However, they cannot capture the full bandwidth of the speech signal due to the inherent low-pass filtering of tissues. They are also not yet available for purchase as the manufacturing process needs to be stabilized.

Deep Learning

On the other hand, deep learning methods have shown outstanding performance in a wide range of tasks and can overcome this last drawback. For speech enhancement, works have been able to regenerate mid and high frequencies from low frequencies. For robust speech recognition, models like Whisper have pushed the limits of usable signals.

The need for an open dataset for research purposes

The availability of large-scale datasets plays a critical role in advancing research and development in speech enhancement and recognition using body-conduction microphones. These datasets allow researchers to train and evaluate deep learning models, which have been a key missing ingredient in achieving high-quality, intelligible speech with such microphones. Such datasets are still lacking. The largest is the ESMB corpus, which represents 128 hours of recordings, but only uses a bone microphone. Other private datasets exist, but they are too limited and not open source.

People

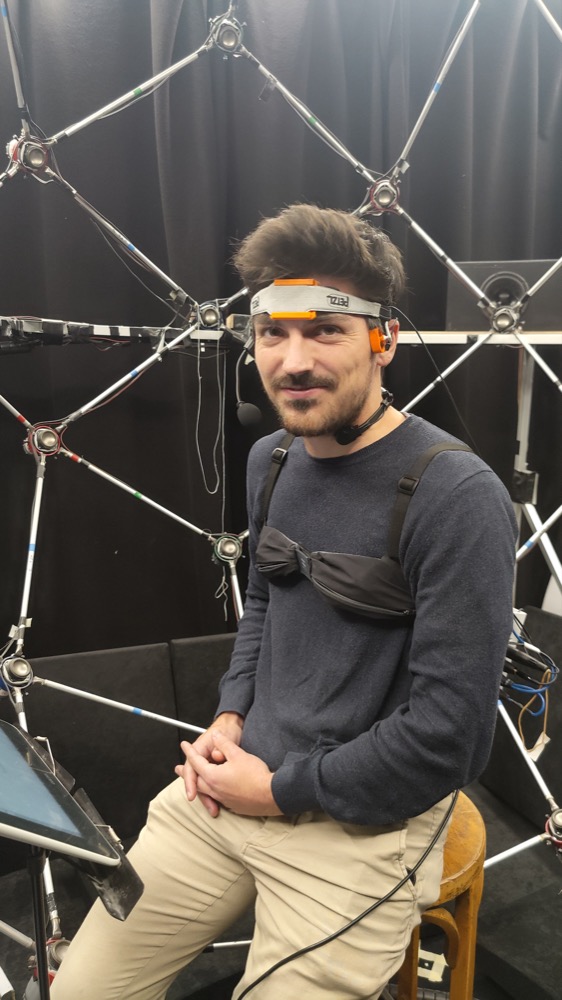

Julien HAURET

Bio

is a PhD candidate at Cnam Paris, pursuing research in machine learning applied to speech processing. He holds two MSc degrees from ENS Paris Saclay, one in Electrical Engineering (2020) and the other in Applied Mathematics (2021). His research training is evidenced by his experiences at Columbia University, the French Ministry of the Armed Forces and the Pulse Audition start-up. Additionally, he has lectured for two consecutive years on algorithms and data structures at the École des Ponts ParisTech. His research focuses on the use of deep learning for speech enhancement applied to body-conducted speech. With a passion for interdisciplinary collaboration, Julien aims to improve human communication through technology.

Role

Co-coordinator of the project. Implemented the recording software and designed the recording procedure. Co-designed the website. Responsible for GDPR compliance. Participated in the selection of microphones. Led the Speech Enhancement task, co-coordinated the Automatic Speech Recognition, and provided support for the Speaker Verification task. Core contributor and manager of participant recording. Oversaw the GitHub project. Co-managed the dataset creation, post-filtering process, and upload to HuggingFace, as well as implemented the retained solution. Responsible for model training on Jean-Zay HPC and their upload/documentation to the Hugging Face Hub. Main contributor to the research article.

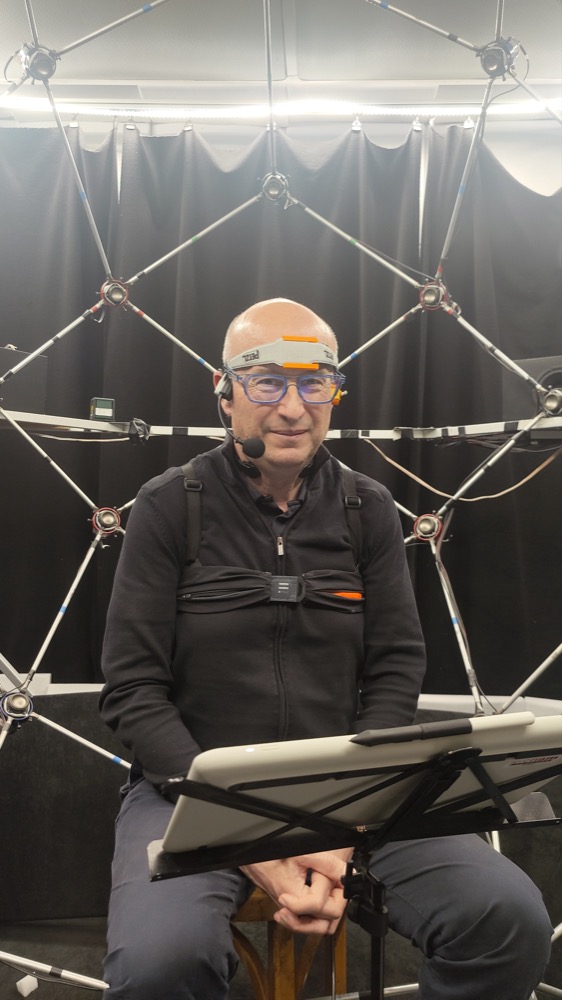

Éric BAVU

Bio

Is a Full Professor of Acoustics and Signal Processing at the Laboratoire de Mécanique des Structures et des Systèmes Couplés (LMSSC) within the Conservatoire National des Arts et Métiers (Cnam), Paris, France. He completed his undergraduate studies at École Normale Supérieure de Cachan, France, from 2001 to 2005. In 2005, he earned an M.Sc in Acoustics, Signal Processing, and Computer Science Applied to Music from Université Pierre et Marie Curie Sorbonne University (UPMC), followed by a Ph.D. in Acoustics jointly awarded by Université de Sherbrooke, Canada, and UPMC, France, in 2008. He also conducted post-doctoral research on biological soft tissues imaging at the Langevin Institute at École Supérieure de Physique et Chimie ParisTech (ESPCI), France. Since 2009, he has supervised six Ph.D. students at LMSSC, focusing on time domain audio signal processing for inverse problems, 3D audio, and deep learning for audio. His current research interests encompass deep learning methods applied to inverse problems in acoustics, moving sound source localization and tracking, speech enhancement, and speech recognition.

Role

Co-coordinator of the project. Responsible for the selection, calibration, and adjustment of the microphones. Co-designed the website. Implemented the backend for sound spatialization. Co-coordinated the Automatic Speech Recognition and the Speaker Verification tasks. Assisted with participant recording. Co-managed the dataset creation, post-filtering process, and upload to HuggingFace. GitHub Contributor. Produced the HuggingFace Dataset card. Main contributor to the research article.

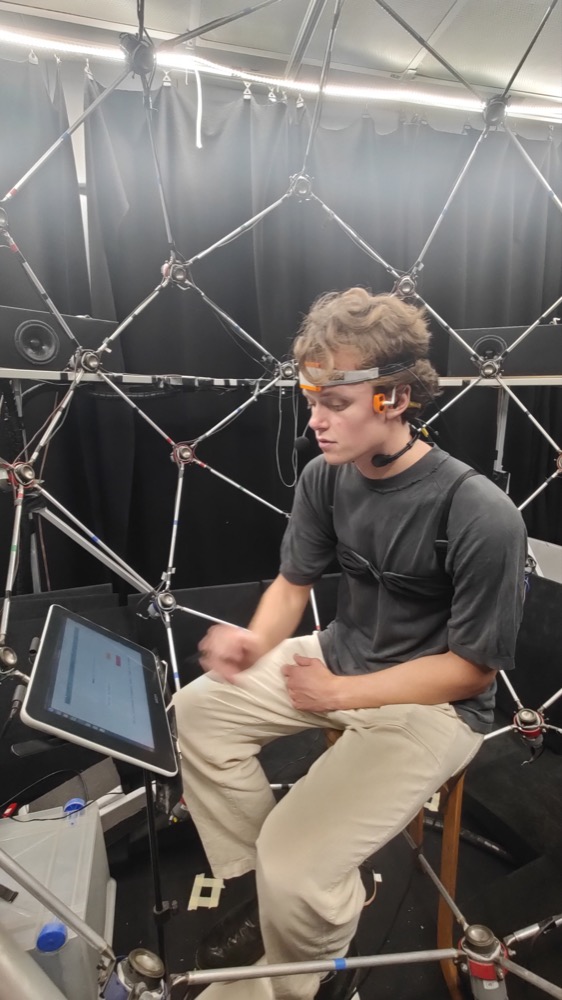

Malo OLIVIER

Bio

Is a engineer-student at INSA of Lyon, that pursued an internship at the Laboratoire de Mécanique des Structures et des Systèmes Couplés (LMSSC) in the Conservatoire National des Arts et Métiers (CNAM), Paris, France. He is following his graduate studies at the Computer Sciences department of INSA Lyon for which he will receive his degree in 2024. Malo has valuable skills implementing different solutions from Information Systems problematics to deep neural networks architectures, including web applications. He foresees to pursue a Ph.D. in Artificial Intelligence, specializing in deep neural networks applied to science domains and hopes his engineer profile highlights his implementing abilities within projects of high interest.

Role

Core contributor to participant recording. Assisted in exploring the Automatic Speech Recognition task, dataset creation, post-filtering process, and upload to HuggingFace. GitHub Contributor. Contributed to the article review process.

Thomas JOUBAUD

Bio

Is a Research Associate at the Acoustics and Soldier Protection department within the French-German Research Institute of Saint-Louis (ISL), France, since 2019. In 2013, he received the graduate degree from Ecole Centrale Marseille, France, as well as the master’s degree in Mechanics, Physics and Engineering, specialized in Acoustical Research, of the Aix-Marseille University, France. He earned the Ph.D. degree in Mechanics, specialized in Acoustics, of the Conservatoire National des Arts et Métiers (Cnam), Paris, France, in 2017. The thesis was carried out in collaboration with and within the ISL. From 2017 to 2019, he worked as a post-doctorate research engineer with Orange SA company in Cesson-Sévigné, France. His research interests include audio signal processing, hearing protection, psychoacoustics, especially speech intelligibility and sound localization, and high-level continuous and impulse noise measurement.

Role

Microphone selection assistance. Co-coordinated the Speaker Verification task. GitHub Contributor. Contributed to the article review process.

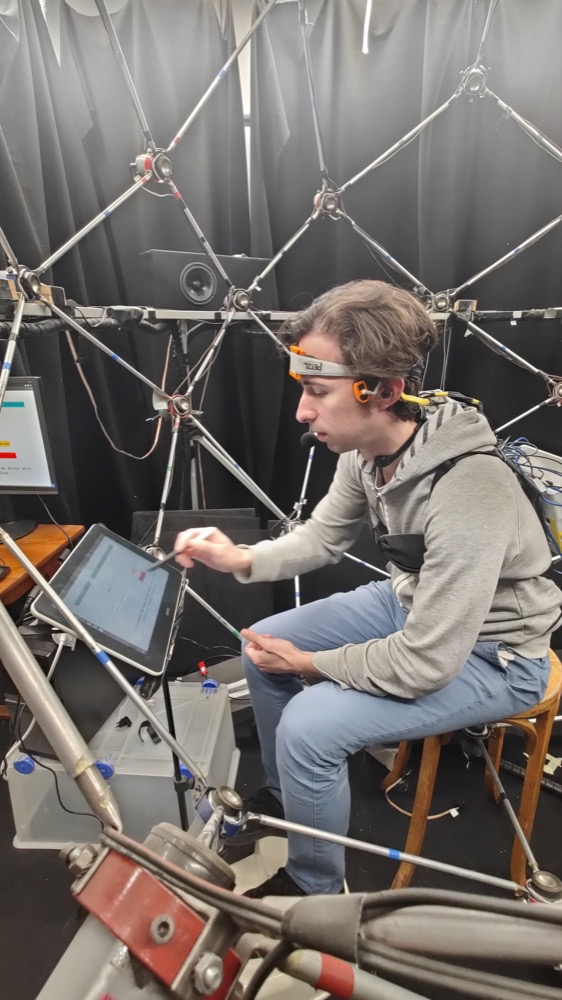

Christophe LANGRENNE

Bio

is a scientific researcher at the Laboratoire de Mécanique des Structures et des Systèmes Couplés (LMSSC) at the Conservatoire National des Arts et Métiers (Cnam), Paris, France. After completing his PhD on the regularization of inverse problems, he developed a fast multipole method (FMM) algorithm for solving large-scale scattering and propagation problems. Also interested in 3D audio, he co-supervised 3 PhD students on this theme, in particular on Ambisonic (recording and decoding) and binaural restitution (front/back confusions).

Role

Participated in the microphone adjustment. Core contributor of participant recording. Contributed to the article review process.

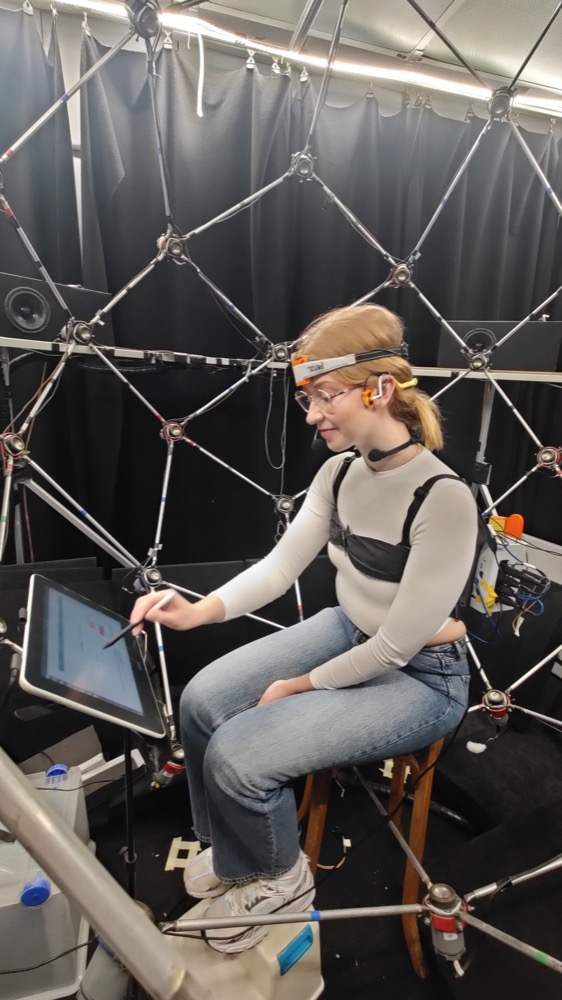

Sarah POIRÉE

Bio

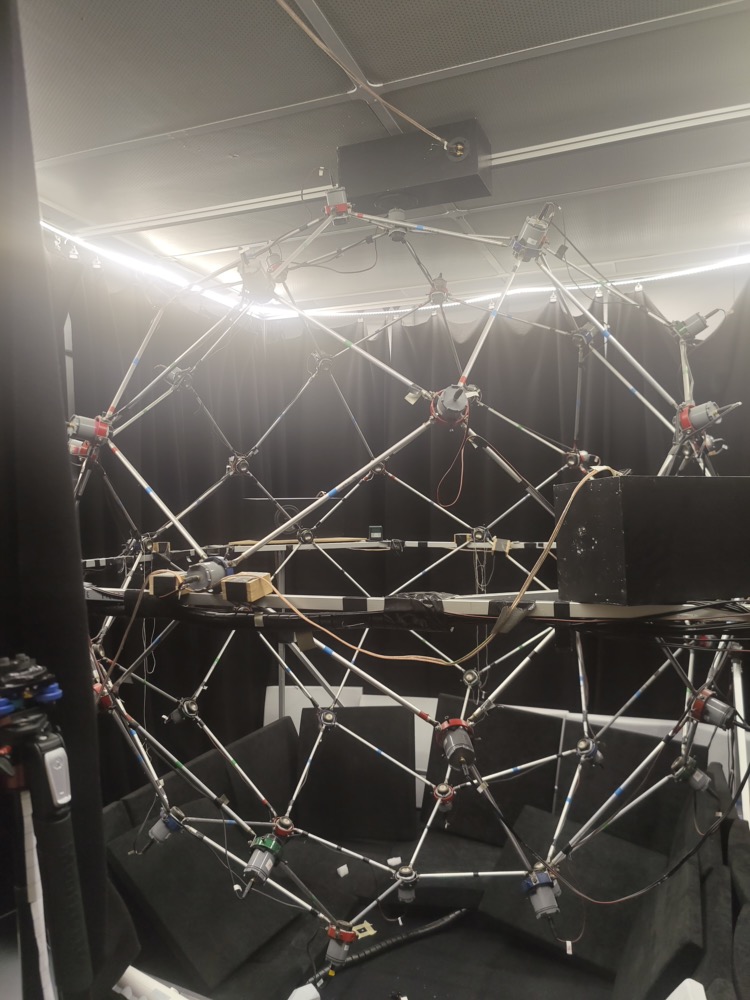

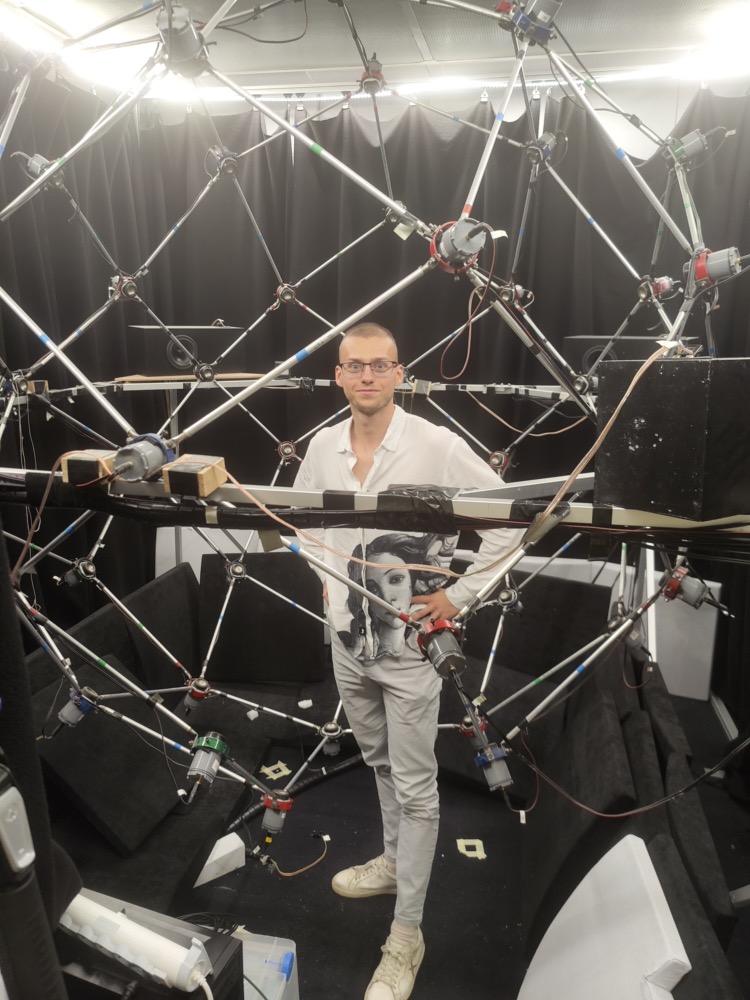

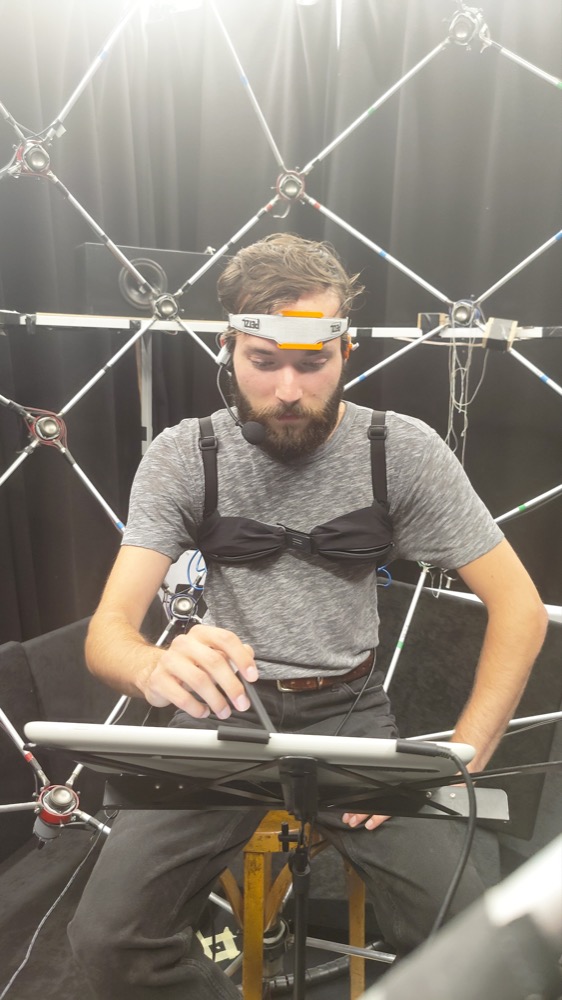

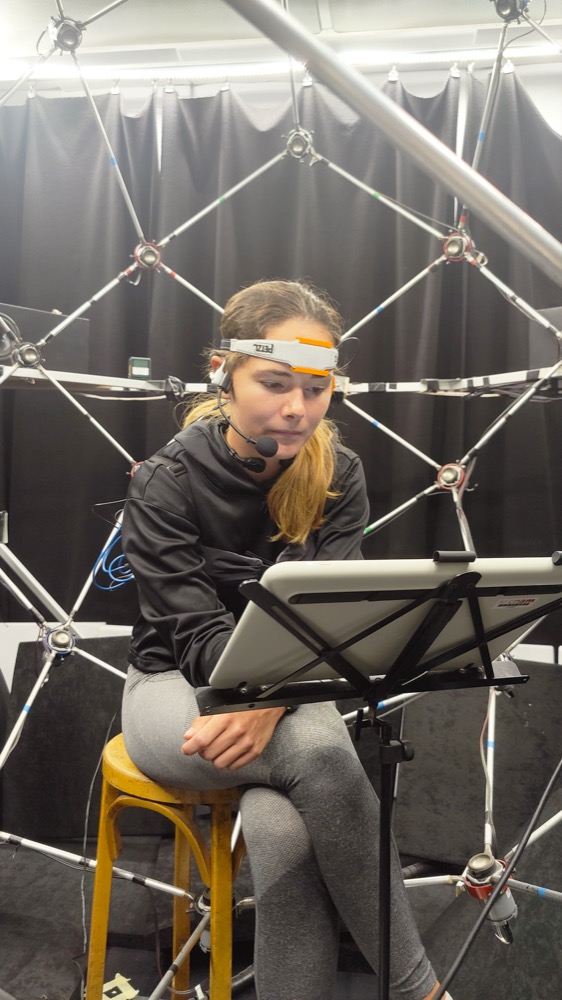

is a technician at the Laboratoire de Mécanique et des Systèmes Couplés (LMSSC) within the Conservatoire National des Arts et Métiers (Cnam), Paris, France. Her activities focus on the design and development of experimental setups. Notably, she contributed to the creation of the 3D sound spatialization system used during the recording of the Vibravox dataset.

Role

Core contributor of participant recording. Contributed to the article review process.

Véronique ZIMPFER

Bio

Is a Scientific Researcher at the Acoustics and Soldier Protection department within the French-German Research Institute of Saint-Louis (ISL), Saint-Louis, France, since 1997. She holds a M.Sc in Signal Processing from the Grenoble INP, France and obtained a PhD in Acoustics from INSA Lyon, France, in 2000. Her expertise lies at the intersection of communication in noisy environments and auditory protection. Her research focuses on improving adaptive auditory protectors, refining radio communication strategies through unconventional microphone methods, and enhancing auditory perception while utilizing protective gear.

Role

Microphone selection assistance. Contributed to the article review process.

Philippe CHENEVEZ

Bio

is an audiovisual and acoustics professional, having graduated from the École Louis Lumière in 1984 with a BTS in audiovisual engineering, and from the CNAM in 1996 with an engineering degree in acoustics. He held the position of Technical Director at VDB from 1990 to 1998, where he specialized in HF and LF electronics, focusing on maintenance and development. In 2006, he founded CINELA, a renowned manufacturer of wind and vibration protection for recording microphones, making a significant contribution to the audiovisual industry with his innovative products.

Role

Responsible for the pre-amplification of the microphones.

Jean-Baptiste DOC

Bio

received the Ph.D. degree in acoustics from the University of Le Mans, France, in 2012. He is currently an Associate Professor with the Laboratoire de Mécanique des Structures et des Systèmes Couplés, Conservatoire National des Arts et Métiers, Paris, France. His research interests include the modeling and optimisation of complex shaped waveguide, their acoustic radiation and the analysis of sound production mechanisms in wind instruments

Role

Participated in the microphone adjustment.