Subsections of Documentation

Hardware

Browse all details of the hardware used for the Vibravox project.

Subsections of Hardware

Audio sensors

Browse all audio sensors used for the Vibravox project.

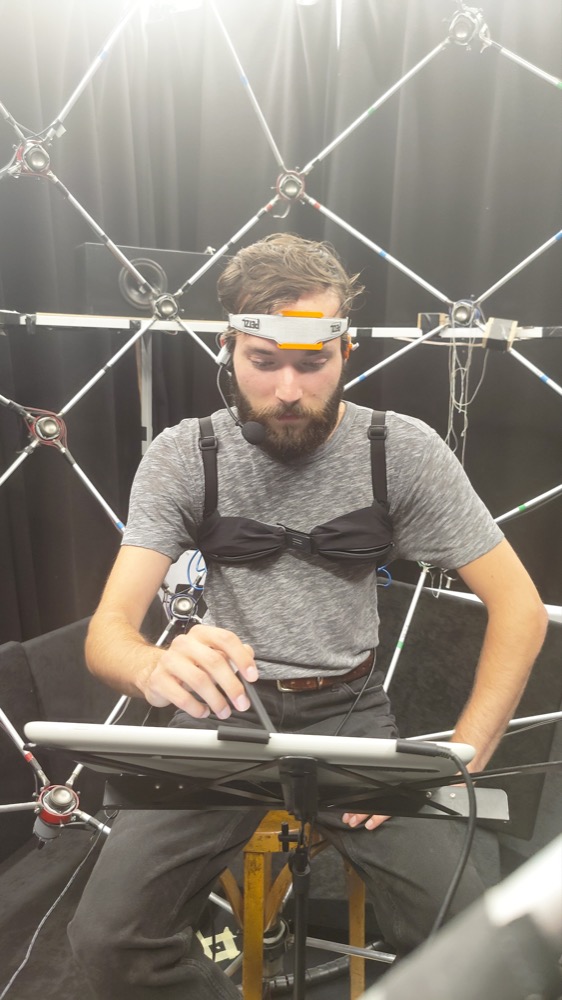

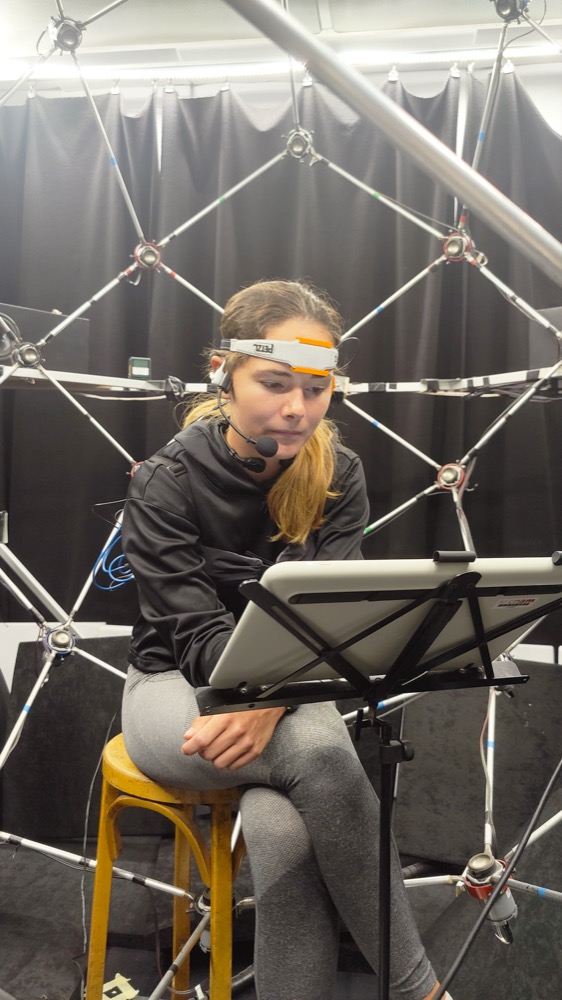

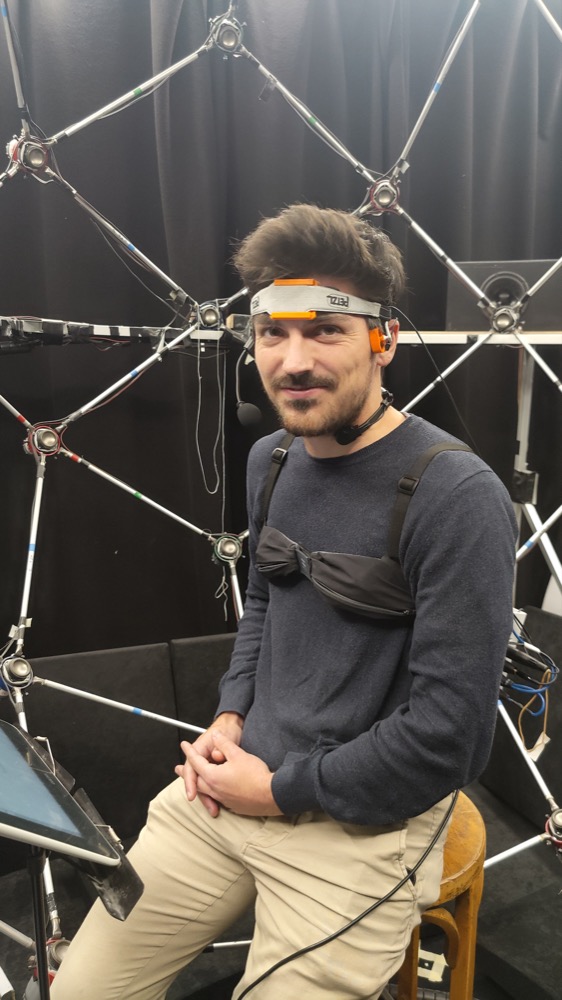

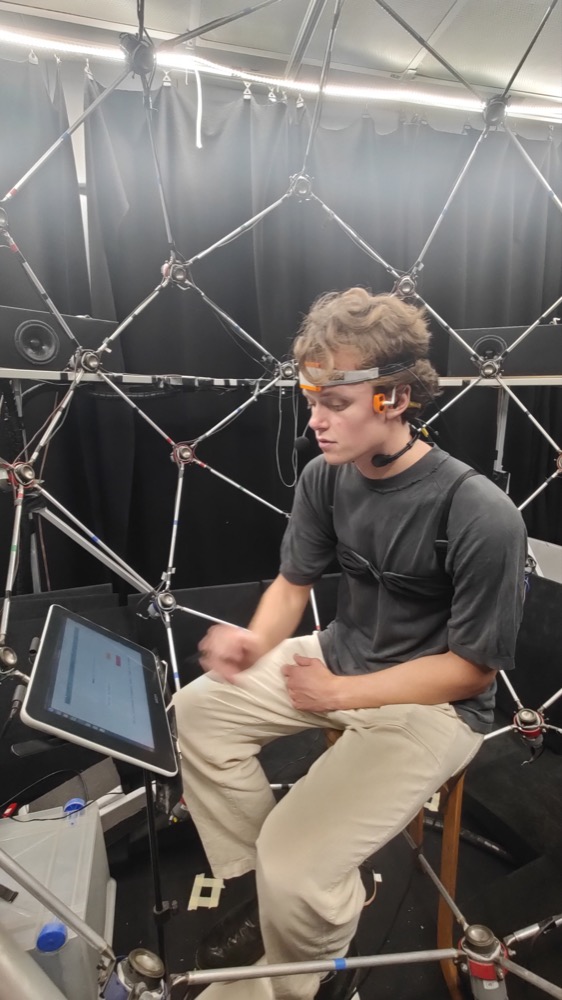

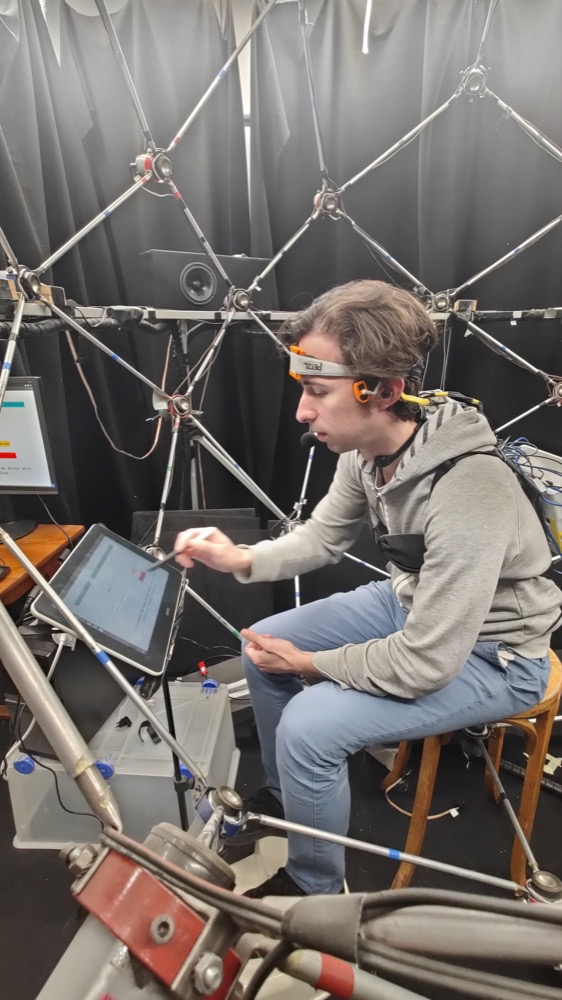

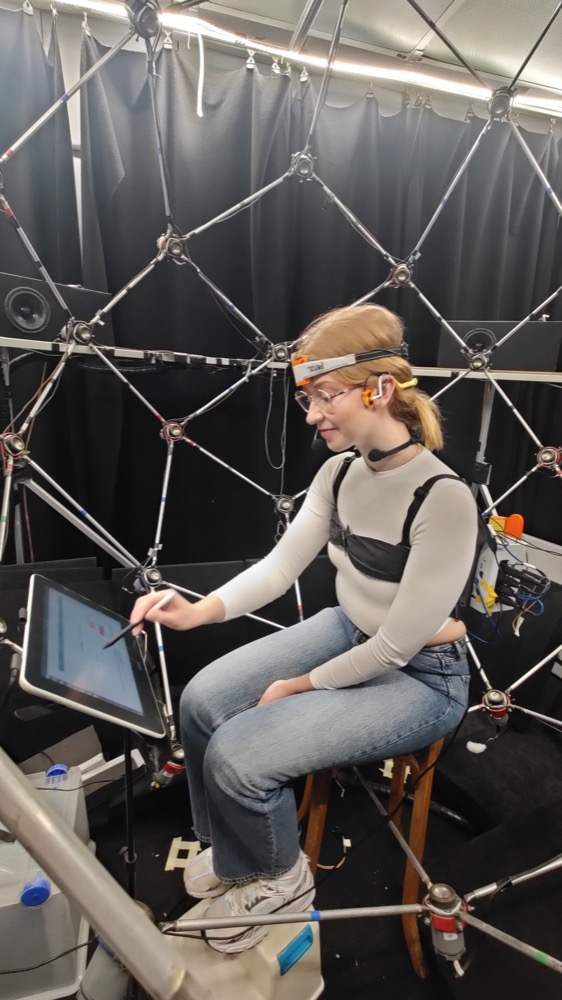

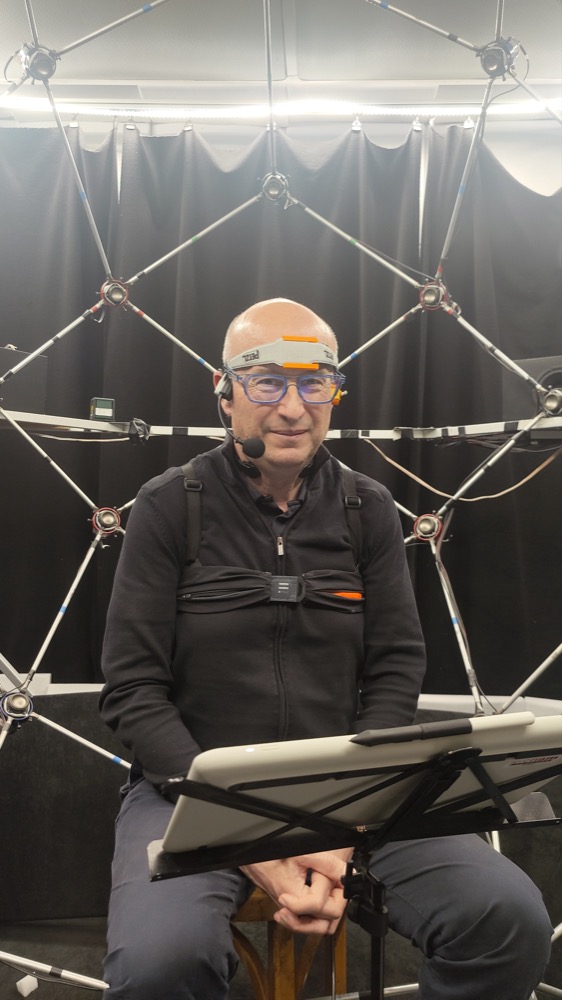

Participants wearing the sensors :

Close up on the sensors :

Subsections of Audio sensors

Reference airborne microphone

Reference

The reference of the air conduction microphone is Shure WH20XLR.

This microphone is available for sale at Thomann. The technical documentation can be found here.

Rigid in-ear microphone

Reference

This rigid in-ear microphone is integrated into the Acoustically Transparent Earpieces product manufactured by the German company inear.de.

Technical details are given in AES publication by Denk et al. : A one-size-fits-all earpiece with multiple microphones and drivers for hearing device research.

For the VibraVox dataset, we only used the Knowles SPH1642HT5H-1 top-port MEMS in-ear microphone, the technical documentation for which is available at Knowles.

Soft in-ear microphone

Reference

This microphone is a prototype produced jointly by the Cotral company, the ISL (Institut franco-allemand de recherches de Saint-Louis) and the LMSSC (Laboratoire de Mécanique des Structures et des Systèmes Couplés). It consists of an Alvis mk5 earmold combined with a STMicroelectronics MP34DT01 microphone. Several measures were taken to ensure optimum acoustic sealing for the in-ear microphone, in order to select the most suitable earmold.

Pre-amplification

This microphone required a pre-amplification circuit.

Laryngophone

Reference

The reference of the throat microphone is Dual Transponder Throat Microphone - 3.5mm (1/8") Connector - XVTM822D-D35 manufactured by ixRadio. This microphone is available for sale on ixRadio.

Forehead accelerometer

Reference

To offer a wide variety of body-conduction microphones, we incorporated a Knowles BU23173-000 accelerometer positioned on the forehead and secured in place with a custom 3D-printed headband.

Pre-amplification

A dedicated preamplifier was developed for this particular sensor.

Hold in position

The designed headband is inspired by a headlamp design. A custom 3D-printed piece was necessary to accommodate the sensor to the headband.

Temple vibration pickup

Reference

The reference of the temple contact microphone is C411 manufactured by AKG. This microphone is available for sale on thomann. It is typically used for string instruments but the VibraVox project will use it as a bone conduction microphone.

Hold in position

This microphone was positioned on the temple using a custom 3D-printed piece. The design of this piece was based on a 3D scan of the Aftershokz helmet, with necessary modifications made to accommodate the sensor with a spherical link.

Recorder

Reference

All of the microphones were connected to a Zoom F8n multitrack field recorder for synchronized recording.

Parameters

| Microphone | Track | Trim (dB) | High-pass filter cutoff frequency (Hz) | Input limiter | Phantom powering |

|---|---|---|---|---|---|

| Temple | 1 | 65 | 20 | Advanced mode | ✅ |

| Throat | 2 | 24 | 20 | Advanced mode | ✅ |

| Rigid in-ear | 3 | 20 | 20 | Advanced mode | ✅ |

| Soft in-ear | 5 | 30 | 20 | Advanced mode | ✅ |

| Forehead | 6 | 56 | 20 | Advanced mode | ✅ |

| Airborne | 7 | 52 | 20 | Advanced mode | ❌ |

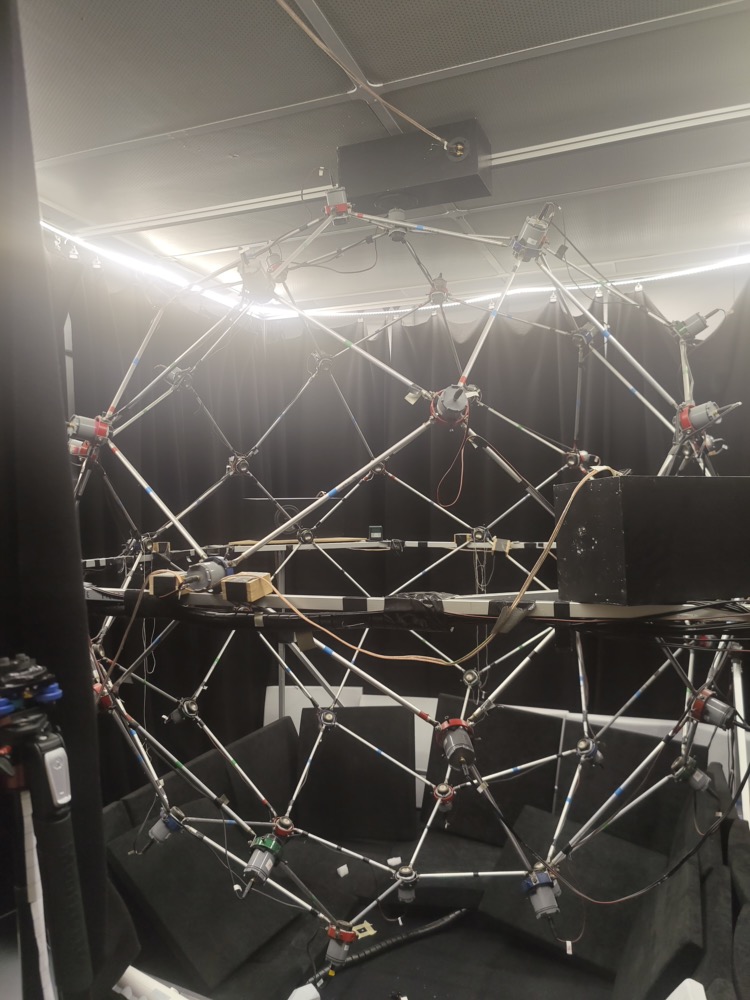

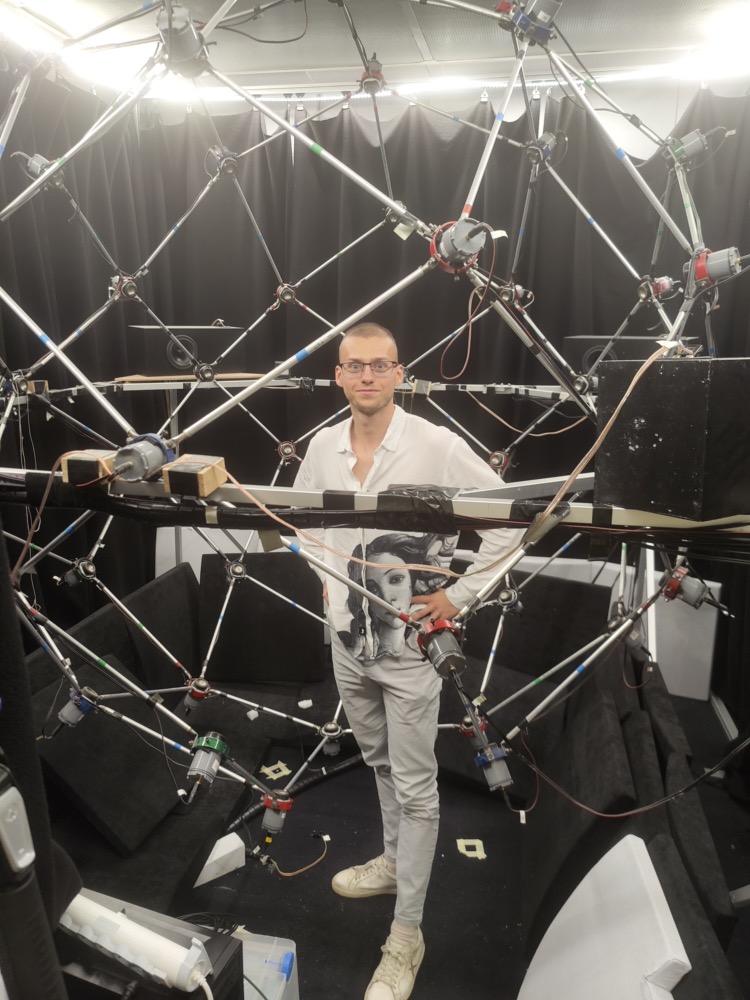

Sound Spatializer

For all the ambient noise samples used in the dataset, the spatialization process was carried out using Spherebedev 3D sound spatialization sphere developed during Pierre Lecomte’s PhD in our lab, and the ambitools library, also developped by Pierre Lecomte during his PhD at Cnam.

The Spherebedev system is a spherical loudspeaker array with a radius of 1.07 meters, composed of 56 loudspeakers placed around the participants. To ensure precise spatialization in the full range of audio, two nested systems were used:

- A low-frequency system with 6 high-performance loudspeakers (ScanSpeak, up to 200 Hz) for accurate bass reproduction.

- A high-frequency system consisting of 50 loudspeakers (Aura, 2 inches, for frequencies above 200 Hz).

The multichannel audio used for higher order ambisonics resynthesis include third-order ambisonic recordings captured using a Zylia ZM-1S microphone, and a fifth-order ambisonic recordings captured with Memsbedev, a custom prototype ambisonic microphone built in our lab at LMSSC.

Software

Browse all details of the software used for the Vibravox project.

Subsections of Software

Frontend

The frontend, built with the tkinter library, consists of 9 sequential windows. The user interface is duplicated on a Wacom tablet used by the participant in the center of the spatialization sphere. Multiple threads were required to allow simultaneous actions, such as updating a progress bar while waiting for a button to be clicked.

Backend

The backend part is comprising:

-

A dynamic reader implemented with the linecache library to avoid loading the entire corpus in memory when getting a new line of text.

-

A cryptography module using cryptography.fernet to encrypt and decrypt the participant identity, necessary to assert the right to oblivion.

-

A ssh client built with paramiko to send instructions to the spatialization sphere when playing the sound, changing tracks, and locating the reading head with jack_transport and ladish_control bash commands.

-

A timer with start, pause, resume and reset methods.

-

A non-bocking streaming recorder implemented with sounddevice, soundfile and queue libraries.

Noise

Noise for the QiN step

After standardizing the sampling frequency, channels and format of the AudioSet files. Single-channel noise is obtained from 32 10-second samples of the following 90 classes:

[‘Drill’, ‘Truck’, ‘Cheering’, ‘Tools’, ‘Civil defense siren’, ‘Police car (siren)’, ‘Helicopter’, ‘Vibration’, ‘Drum kit’, ‘Telephone bell ringing’, ‘Drum roll’, ‘Waves, surf’, ‘Emergency vehicle’, ‘Siren’, ‘Aircraft engine’, ‘Idling’, ‘Fixed-wing aircraft, airplane’, ‘Vehicle horn, car horn, honking’, ‘Jet engine’, ‘Light engine (high frequency)’, ‘Heavy engine (low frequency)’, ‘Engine knocking’, ‘Engine starting’, ‘Motorboat, speedboat’, ‘Motor vehicle (road)’, ‘Motorcycle’, ‘Boat, Water vehicle’, ‘Fireworks’, ‘Stream’, ‘Train horn’, ‘Foghorn’, ‘Chainsaw’, ‘Wind noise (microphone)’, ‘Wind’, ‘Traffic noise, roadway noise’, ‘Environmental noise’, ‘Race car, auto racing’, ‘Railroad car, train wagon’, ‘Scratching (performance technique)’, ‘Vacuum cleaner’, ‘Tubular bells’, ‘Church bell’, ‘Jingle bell’, ‘Car alarm’, ‘Car passing by’, ‘Alarm’, ‘Alarm clock’, ‘Smoke detector, smoke alarm’, ‘Fire alarm’, ‘Thunderstorm’, ‘Hammer’, ‘Jackhammer’, ‘Steam whistle’, ‘Distortion’, ‘Air brake’, ‘Sewing machine’, ‘Applause’, ‘Drum machine’, “Dental drill, dentist’s drill”, ‘Gunshot, gunfire’, ‘Machine gun’, ‘Cap gun’, ‘Bee, wasp, etc.’, ‘Beep, bleep’, ‘Frying (food)’, ‘Sampler’, ‘Meow’, ‘Toilet flush’, ‘Whistling’, ‘Glass’, ‘Coo’, ‘Mechanisms’, ‘Rub’, ‘Boom’, ‘Frog’, ‘Coin (dropping)’, ‘Crowd’, ‘Crackle’, ‘Theremin’, ‘Whoosh, swoosh, swish’, ‘Raindrop’, ‘Engine’, ‘Rail transport’, ‘Vehicle’, ‘Drum’, ‘Car’, ‘Animal’, ‘Inside, small room’, ‘Laughter’, ‘Train’]

This represents 8 hours of audio.

Loudness normalization

from ffmpeg_normalize import FFmpegNormalize

normalizer = FFmpegNormalize(normalization_type="ebu",

target_level = -15.0,

loudness_range_target=5,

true_peak = -2,

dynamic = True,

print_stats=False,

sample_rate = 48_000,

progress=True)

normalizer.add_media_file(input_file='tot.rf64',

output_file='tot_normalized.wav')

normalizer.run_normalization()Spatialization

The direction of sound is sampled uniformly on the unit sphere using the inverse cumulative distribution function.

Noise for the SiN step

The noise used for the final stage of the recording was captured with a ZYLIA ZR-1 Portable. It consists of applause, demonstrations and opera for a total of 2h40.

Recording protocol

Procedure

The recording process consists of four steps:

-

Speak in Silence: For a duration of 15 minutes, the participant reads sentences sourced from the French Wikipedia. Each utterance generates a new recording and the transcriptions are preserved.

-

Quiet in Noise: During 2 minutes and 24 seconds, the participant remains silent in a noisy environment created from the AudioSet samples. These samples have been selected from relevant classes, normalized in loudness, pseudo-spatialized and are played from random directions using a spatialization sphere equipped with 56 loudspeakers. The objective of this phase is to gather realistic background noises that will be combined with the Speak in Silence recordings to maintain a clean reference.

-

Quiet in Silence: The procedure is repeated for 54 seconds in complete silence to record solely physiological and microphone noises. These samples can be valuable for tasks such as heart rate tracking or simply analyzing the noise properties of the various microphones.

-

Speak in Noise: The final phase (54 seconds) will primarily serve to test the different systems (Speech Enhancement, Automatic Speech Recognition, Speaker Identification) that will be developed based on the recordings from the first three phases. This real-world testing will provide valuable insights into the performance and effectiveness of these systems in practical scenarios. The noise was recorded using the ZYLIA ZR-1 Portable Recorder from spatialized scenes and replayed in the spatialization sphere with ambisonic processing.

Post-processing

Please refer to the Vibravox paper for more information on the dataset curation.

Analysis

Coherence functions

The coherence functions of all microphones are shown in the figure below during an active speech phase.

Consent form

Ensure compliance with GDPR

A consent form for participation in the VibraVox dataset has been drafted and approved by the Cnam lawyer. This form mentions that the dataset will be released under the Creative Commons BY 4.0 license, which allows anyone to share and adapt the data under the condition that the original authors are cited. All Cnil requirements have been checked, including the right to oblivion. This form must be signed by each VibraVox participant.

The consent form

The voice recordings collected during this experiment are intended to be used for research into noise-resilient microphones, as part of the thesis project of Mr. Julien HAURET, PhD candidate at the Conservatoire national des arts et métiers (Cnam) (julien.hauret@lecnam.net).

The purpose of this form is to obtain the consent of each of the participants in this project to the collection and storage of their voice recordings, necessary for the production of the results of this research project.

The recordings collected will be anonymized and shared publicly at vibravox.cnam.fr under a Creative Commons BY 4.0 license, it being understood that this license allows anyone to share and adapt your data.

This processing of personal data is recorded in a computerized file by Cnam.

The data may be kept by Cnam for up to 50 years.

The recipients of the data collected will be the above-mentioned doctoral researcher and his thesis supervisor, Mr. Éric Bavu.

In accordance with the General Data Protection Regulation EU 2016/679 (GDPR) and laws no. 2018-493 of June 20, 2018 relating to the protection of personal data and no. 2004-801 of August 6, 2004 relating to the protection of individuals with regard to the processing of personal data and amending law no. 78-17 of January 6, 1978 relating to data processing, you have the right to access, rectify, oppose, delete, limit and port your personal data, i.e. your voice recordings.

To exercise these rights, or if you have any questions about the processing of your data under this scheme, please contact vibravox@cnam.fr. Although your right to be forgotten remains applicable for the duration of the data retention period, we advise you to exercise this right before the final publication of the database, which is scheduled for 01/10/2023.

If, after contacting us, you feel that your rights have not been respected, you can contact the Cnam-établissement public data protection officer directly at ep_dpo@lecnam.netonmicrosoft.com. You can also lodge a complaint with Cnil.